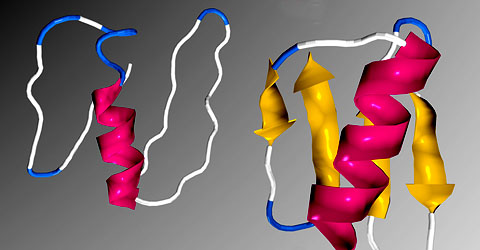

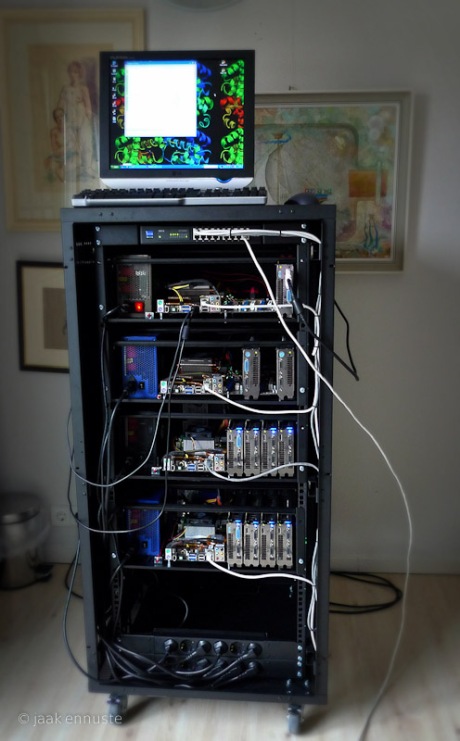

Our GPGPU supercomputer is a GPU-based massively parallel machine employing more than a thousand parallel streaming processors. GPU computing is a very new technology and is very cost-effective compared with older CPU-only solutions.

Performance (currently):

- 6240 streaming processors + 14 CPU cores

- 23,2 arithmetic TFLOPS (yes, 23 200 GFLOPS)

- Max theoretical PPD, hi-PPD WU’s = 190 000 (Folding@home, Fahmon)

11 GPU’s installed

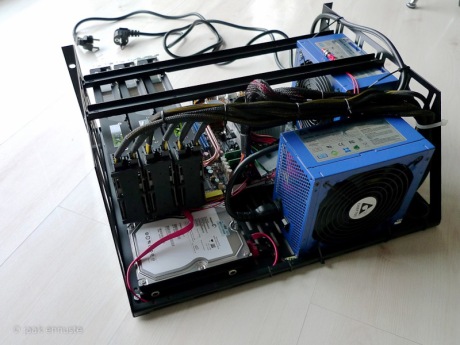

Typical 1 node configuration (nodes ED01 – ED04):

- AMD Phenom 8650 2,3 GHz X3 Triple-Core CPU

- MSI K9A2 Platinum motherboard with 4 x double-spaced PCI-E x16 slots

- 4 GB RAM (2 x 2 GB Apacer DDR2-800 modules)

- 4 x GTX295 double GPU cards (total 8 GPU’s per node)

- 2 x 850 W power supplies (1700 W total per node; two synchronised Chieftec CFT-850 Turbo Series cable-management units)

- HDD 80 GB (WD 7200 RPM SATA)

- 1 x F@H SMP 6.23 beta client running in SMP mode

- 8 x F@H GPU 6.23 beta clients, each running on its own GPU

- LogMeIn client

- MS Windows XP Pro 32-bit SP3

One module with 3 GPU’s installed.

All nodes are interconnected via a Cisco rackmount switch. Power is distributed via 2 independent 240 V rails.

Power consumption: 1100-1400 Watts

89 Comments


[…] plans for the future. Phase 1 and 2 are successfully completed. Architecture of Phase 4 is on the drawing board. It will be HPC (High Performance Cluster), using […]

[…] to say, its about 3800 x as powerful as my current computer doing 5,3 TFLOPS (0,0053 […]

Technically your system isn’t an MPP system, because you are not running a single instance of a program that uses a parallel algorithm across all of the GPUs.

Besides, I think “stream processors” is really just a marketing name for the underlying GPU architecture, which they want to keep hidden from prying eyes.

(I’ve not been able to find out exactly how many FPUs are inside the GTX295.)

If you want to see an MPP installation, go to your nearest supercomputing facility and watch them run a program across all of the nodes.

Otherwise, still very very cool!

Actually the underlying GPU architecture is well documented in numerous (scientific) publications. Each GTX295 has 2 GPU’s, each of which has 30 Multi Processors, each of which has 8 scalar Streaming Processors (each of which can do single-precision floating point (multiply-add and multiply, dual issue) and integer arithmetic). Each MP also contains a double precision floating point unit and 2 Special Function Units.

So three cards give 3 x 2 x 30 x 8 = 1440 single-precision arithmetic units. Each of these can, at peak, do 3 FLOPS per clock cycle.
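The arithmetic in this comment is easy to check in a few lines of Python. This is only a rough sketch: the 1.242 GHz shader clock is an assumption (the reference GTX 295 clock, which nobody in this thread states), and the function name is made up for illustration.

```python
# Peak single-precision throughput from the unit counts quoted in the comment.
SHADER_CLOCK_GHZ = 1.242  # assumption: reference GTX 295 shader clock, not given in the thread

def peak_gflops(cards, gpus_per_card=2, mps_per_gpu=30, sps_per_mp=8,
                flops_per_clock=3, clock_ghz=SHADER_CLOCK_GHZ):
    """Return (streaming processors, peak GFLOPS) for a number of GTX 295 cards."""
    sps = cards * gpus_per_card * mps_per_gpu * sps_per_mp
    return sps, sps * flops_per_clock * clock_ghz

sps, gflops = peak_gflops(cards=3)
print(sps, round(gflops))  # 1440 5365
```

Under that clock assumption, three cards land at roughly the 5,3 TFLOPS quoted for the 3 x GTX 295 system, and `peak_gflops(cards=13)` gives 6240 streaming processors, matching the count in the post.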

I believe that a computer built like mine is very much a multiprocessor-architecture computer. The CUDA interface gives this power cheaply to programmers and scientists. True, the problems solved on this GPGPU computer have to be massively parallel, but most compute-hungry problems, such as weather forecasting, seismology and molecular modelling, are. So GPGPU clusters are the future, because of their much better price/performance and energy/performance ratios.

hello, where is your SLI cable?

This is a non-SLI configuration. CUDA uses all streaming processors in parallel by itself.

Yes, for gaming it is quite OK. But for folding you need more GPU streaming processors.

Jaak

Doesn’t each GPU need a CPU core to itself? Would having 8 CPU cores increase the performance?

No, each GPU doesn’t need a dedicated CPU core. You can happily run an 8-GPU system with a cheap dual-core CPU. All the control logic needed is built into the NVIDIA card and controlled via the CUDA interface.

Jaak


I was in awe when I saw your configuration… it’s a machine I dream about. Then I saw the OS. I mean, come on… are you kidding me…

crazytrot, this is a folding machine. Folding is true work, not a show.

Well dear,

*You* have reduced it to a show by using Windows.

And don’t tell me about “true work”. I have done it on Windows and on Linux, so I know what you lose on Windows.

I can only say that you should use Linux, but if you don’t, it’s your loss.

I do not want any further arguments. I rest my case.

Hi,

We’re about to apply for a small grant to build our first CUDA-based supercomputer, hoping for further grants to extend the installation in the future. I have a few questions:

1) Why did you choose a “gaming” card, the GeForce GTX 295, and not Quadro or Tesla, which are recommended by NVIDIA for scientific applications?

2) If you have 4 graphics cards, how does the OS “know” which one drives the monitor display?

3) Can one use different GPUs on a single motherboard? Say, 1 GeForce 295 for nice video display and three 4GB Teslas for memory-demanding supercomputing?

4) Why did you choose NVIDIA rather than ATI Radeons? Is this because of CUDA?

regards, ZK

1a. I chose gaming cards because of the lower price. In general they have exactly the same GPUs inside.

1b. I chose the GTX295 because it’s a dual-GPU card, containing 2 x 240 = 480 shader processors. The most powerful single-GPU CUDA card has only 240 processors.

2. Actually, I have 8 GPUs (on 4 dual-GPU cards) inside. I always use the first output of the first card (the one closest to the CPU), and the OS recognizes the monitor.

3. Yes, you can. All NVIDIA cards use exactly the same driver and CUDA version.

4. I chose protein folding in the Folding@Home project. For that particular task ATI lags behind, despite having very good numbers on paper. A typical high-end ATI card folds at half the rate of the comparable NVIDIA card. Also, current ATI cards require much more CPU power, while NVIDIA cards put very little load on the CPU.

Really impressive setup. I arrived at your site while looking for a good price/performance ratio.

I’m glad you concluded that the GTX 295 is a better choice than Tesla, which is priced too high for the same performance.

The only part I’m not sure about is the rack space usage. Have you thought about a 1U or 2U architecture?

Also, please give an approximate cost of your supercomputer, in the 1-case, 4xGPU, 2xPSU version.

Thanks,

John

Thanks for your comment.

Actually, the GTX 295 has almost twice the performance of Tesla’s most powerful card 🙂

Space usage could be vastly improved, I agree. This is a kind of proof-of-concept supercomputer. I used off-the-shelf low-price components, standard motherboards, etc. It’s possible to design a 2U system with 4 GPU cards using cables, but cooling will be a real challenge. Each card produces 200-250 watts of heat.

I will dedicate one post to analysing the total cost, including electricity and possibly cooling. Stay tuned!

Jaak

Jaak

I appreciate the idea of using low-price components: if you get the same performance for less money, why not.

I would be tempted to see if 4 GTX295 cards would fit with this motherboard into a 2U case with water cooling, using risers. They might not fit that well, but it’s worth a try. I’m sure they would fit in a 3U configuration, since there they could even fit vertically.

For water cooling, do you know if anybody has connected a full rack of computers to the same water cooling system?

And I also wonder, isn’t there a big rack-level power source that could be used instead of 2 PSUs per computer?

Thanks,

4 x GTX 295 may fit into 1U by themselves. NVIDIA almost did it, fitting 4 x Quadro (Tesla) cards into the 1U Tesla S1070 http://www.nvidia.com/object/product_tesla_s1070_us.html. There are several challenges:

1. The Tesla S1070 doesn’t fit a motherboard into the chassis; it has to use a separate host system;

2. The GTX295 consumes 100 W more than the Tesla C1060 card; combined with the motherboard, it will be a very big challenge to get a 1,5 – 1,6 kW power supply into 1U;

3. Cooling will be an issue. The Tesla S1070 is air-cooled, but 1U fans look really noisy and inefficient to me.

Jaak

About a rack-level power supply: I tried to find some industrial 12 V supplies, but had no luck. The 2-PSU solution was cheaper, fault tolerant and efficient.

Jaak

So, in 2U you can fit a motherboard, CPU or CPUs, 1 HDD, 4 GPUs and 2 PSUs. It will be cramped.

Regarding cooling, I was looking for a rack mounted external cooling system and found it:

http://www.koolance.com/water-cooling/default.php?cPath=28_42

$1300 does look expensive, but you can fill the rack with as many 2U or 3U units as you want, and it is reliable because you can be sure they won’t burn out over time.

At this density, the budget for a rack will be maybe 20-30% more than for the air-cooled solution.

In my case I’m looking for the most efficient high-GPU-density computers I can get. Budget is less of an issue since I target financial applications. Reliability is definitely an issue.

Anyway, regarding cost, simply replacing the S1070 with 4 x GTX 295 means lower cost per performance.

Also, I was thinking about water cooling at another level, which can be less expensive if done DIY: connect the water cooling pump to an exterior aircon unit. Professionally there are exterior water chilling units, which would need proper budgeting for the number of servers.

Well done Jaak!

Thanks, Lauri. I gave a lecture at TTÜ and got very valuable feedback. I am currently setting up a work group for massively parallel scientific applications on CUDA.

Jaak

Good day sir Jaak!

I’m deeply impressed by your system. Our laboratory plans to build a computing server, and I plan to implement a similar configuration (if not an exact copy) of your system for it.

I was curious, though, about the cooling system for your nodes. I read on tech sites that four of these GTX cards emit an enormous amount of heat at full load, and most of them discuss various cooling solutions for GTX 295 cards (including water cooling and traditional fans).

Is leaving them air-cooled at room temperature enough to keep them from melting? Or do you have a special air conditioning unit to cool the room where the nodes reside?

thanks!

Hi James

Thanks for your kind words.

Yes, cooling is one of the main issues when using 4 x GTX cards. Each emits about 200 W of heat, for a total of more than 1 kW of heat per node including the chipset, CPU and PSUs. The GTX295 has quite good air cooling. My rack is built so, that GTX cards push 90 degree C air out from rack.

I live in Estonia and still run this rig at home. I don’t have AC, but as the outside temperature is still around 15 degrees, I’m able to run this system without it. In the near future, though, I’ll find a cooled server room for my rig.

Please find more about cooling here: https://estoniadonates.wordpress.com/2009/04/04/32-gpu-folding-rig-cooling-diagram/

Jaak
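For anyone planning cooling or power for a similar node, the figures quoted in the post and in these comments can be combined into a rough budget. The sketch below only restates those numbers; the function names are made up for illustration and nothing here was measured separately.

```python
# Rough per-node heat/power budget from the figures quoted in this thread.
GPU_CARDS = 4
CARD_WATTS = (200, 250)         # per-card heat range quoted in the comments
NODE_DRAW_WATTS = (1100, 1400)  # node power consumption quoted in the post
PSU_WATTS = 2 * 850             # two 850 W supplies per node

def gpu_heat_range(cards=GPU_CARDS, per_card=CARD_WATTS):
    """Return (low, high) total GPU heat in watts."""
    return cards * per_card[0], cards * per_card[1]

def psu_headroom(draw=NODE_DRAW_WATTS, capacity=PSU_WATTS):
    """Return (worst-case, best-case) spare PSU capacity in watts."""
    return capacity - draw[1], capacity - draw[0]

print(gpu_heat_range())  # (800, 1000)
print(psu_headroom())    # (300, 600)
```

So the GPUs alone account for most of the node's heat, and the dual-PSU arrangement leaves a few hundred watts of margin at the quoted consumption.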

Nice rig Jaak!

But how did you get 2 PSUs working together in 1 PC? How are they wired / synchronized?

Thanks!

Plamen, yes, I use a 2-PSU arrangement. It is more efficient, with less cost per watt. You need a mod or a special cable to achieve it. I have a post devoted to the dual-PSU setup: https://estoniadonates.wordpress.com/2009/04/02/dual-psu-pc-system/


“My rack is built so, that GTX cards push 90 degree C air out from rack.” 90C? really? is that a typo? What kind of inlet temps are you running this at? 15c? and then it raises to 90c from the outlets?

I never measured the exact temperature, but about that. The cards’ temperature is 85-95 degrees and the outgoing air is quite hot.

I looked up the gpu water block topic, and there are probably 10 companies making them, and they all seem to support multiple cards feeding into each other. For instance, with two dual-chip cards: http://www.ocforums.com/showthread.php?t=601824 and three: http://www.custompc.co.uk/blogs/mremulator/2008/12/11/overclocked-orange/

In addition, they can provide a single hard link to join them together, for instance: http://www.sidewindercomputers.com/gptr1.html

Now I suspect it is possible to do this with adjacent slots and some smart water block engineering. One could email all of the different companies to see!

I’m still torn between air and water cooling. From what I’ve heard, water cooling holds temperatures 30 °C (!) lower than air cooling. I have had many cards RMA’d, and I think it’s because of the gruelling temperatures. Still, water cooling is very pricey and not so easily deployable in rack systems.

Thanks for the links, good to have them.

Jaak

I agree, water cooling is a big hassle with lots of extra problems and cost. But if you want more density, water cooling is the only way, I think, maybe! ! 🙂

PS: I looked for regular cases that would work for this, and the best I can find is something that doesn’t require much modification: http://www.lian-li.com.tw/v2/en/product/product06.php?pr_index=311&cl_index=1&sc_index=25&ss_index=61&g=d

You replace the top cover with this: http://www.lian-li.com.tw/v2/en/product/product06.php?pr_index=295&cl_index=2&sc_index=10&ss_index=74&g=f

Remove the front hard drive rack and replace it with this: http://www.hwlabs.com/index.php?option=com_content&view=article&id=19&Itemid=21

Cool the cpu with a big heatsink and put the second psu in the optional top bay. Pump and reservoir fit on the bottom. Top and back fans blow out, front fans blow in. I believe this will JUST handle 1200w. This hardware is very new, so this is just a guess.

[…] machine within reach of a hobbyist. I’d be happy to try to implement an AI on some recent home-built supercomputers, at least as far as the raw MIPS was […]

Great set up.

I would like to know how much the rig cost in total.

1) Could you run games on CUDA?

2) What about running multiple monitors?

Totally insane…!!! Haven’t you run 3DMark 06 on this thing???

This machine is not optimised for 3DMark, because the GPUs do not work in SLI. CUDA scales across them without SLI. Besides, to get a good 3DMark score you should use an i7 processor. Since this machine mostly uses GPU power, its CPU is not the strongest in the world.

Rahul, the cost of the rig is about US$ 10 000. You can use 8 monitors with each node, but that is not the purpose of this rig. Its purpose is to use the power of the GPUs for solving complex parallel tasks in the area of molecular dynamics.

yes, whole idea was to get max GFLOPS from off-the shelf cheap components…

What can you actually do with it? Use it for rendering in Corel or, say, 3D Max? Or how do you make use of them?

I use it for massively parallel scientific computing. At the moment I am helping Stanford University find medicines. It computes molecular dynamics problems.

Jaak

What does that process look like in general? Some specific software or something? And when it is computing them, does it really bog down and take quite a while before it is finished?

It’s impressive work and I am considering the same hardware. Your construction is definitely the winner, but I am also considering the 275 with 1.8 GB memory (just came out), which is half the price of the 295 but with the same memory size.

The problem is that I have to exchange boundary data for my application (FDTD).

If I use 8 GPUs, that would mean that I will have to transfer twice as much data over the total bandwidth of PCI-E (8 segments, compared to 4 segments for the 275).

Thus with 4 x 295 I will get twice the cores (8 GPUs with 8 x 240 cores), but twice the data transfer.

With the other option, 4×275, I will get 4×240 cores but half the bandwidth, possibly less than the total bandwidth of PCI-E.

Can you give me any suggestions or a ball-park guess for my concerns?

Many thanks in advance,

Best regards.

Hi nkpark

Glad to hear that you are considering using the immense power of GPGPU technology.

The main difference between the 275 and the 295 is the number of GPUs. The GTX275 is a single-GPU card and the 295 is a dual-GPU model. This physical advantage makes it possible to have double the GPUs on the same system board. In other words, it’s possible to achieve almost double the TFLOPS performance per system.

As for data transfer, it depends on the character of your problem. A good GPGPU application is optimised for the local onboard DDR5 RAM and tries to avoid global RAM accesses. So it all depends on how well the specific computational algorithm scales.

Jaak
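The 4-versus-8-GPU trade-off raised in the FDTD question can be put into rough numbers. The sketch below assumes a simple 1-D slab split of the grid, with every internal boundary sending one full plane in each direction for all six field components per time step. That model, the function name and the 256 x 256 plane size are illustrative assumptions, not anything stated by either commenter, and a real FDTD code usually exchanges fewer components.

```python
def halo_bytes_per_step(num_gpus, ny, nz, fields=6, bytes_per_value=4):
    """Bytes crossing GPU boundaries per time step for a 1-D slab split.

    Assumes each of the (num_gpus - 1) internal interfaces sends one
    ny x nz plane in each direction for every field component.
    """
    interfaces = num_gpus - 1
    plane = ny * nz * fields * bytes_per_value
    return interfaces * 2 * plane

four = halo_bytes_per_step(4, ny=256, nz=256)    # e.g. 4 x GTX 275
eight = halo_bytes_per_step(8, ny=256, nz=256)   # e.g. 4 x GTX 295
print(four, eight)  # 9437184 22020096
```

Under these assumptions, going from 4 to 8 slabs raises the boundary traffic by a factor of 7/3, a little more than the "twice" estimated in the question, while the per-GPU compute volume halves.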

NVIDIA does not have (G)DDR5. Just GDDR3. BTW, I can offer high quality, silent but powerful 17cm diameter fans for extra cooling. They are meant to work on 24V but run fine and silent at 12V. You know where to find me 🙂

I’m looking at the K9A2 / Phenom 8650 for a build, how much ppd with the 8650? Any difficulties?

A triple-core AMD is a very good choice. Your total PPD depends more on the GPUs used. The CPU gives only a small fraction, say 1500 PPD. Under the load of 8 GPUs it may drop to 700-900 PPD, but together with the GPUs the total is around 50 000 PPD.
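As a back-of-the-envelope check of those numbers, the GPU share per card can be derived from the node total. This is only a sketch using the figures from the reply above; the function name is invented for illustration, and real PPD varies with the work unit.

```python
def ppd_per_gpu(node_ppd=50_000, cpu_ppd_under_load=(700, 900), gpus=8):
    """Return the (low, high) PPD attributable to each GPU in one node."""
    low = (node_ppd - cpu_ppd_under_load[1]) / gpus
    high = (node_ppd - cpu_ppd_under_load[0]) / gpus
    return low, high

print(ppd_per_gpu())  # (6137.5, 6162.5)
```

In other words, each GPU contributes a little over 6000 PPD, and the CPU client accounts for under 2% of the node total.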

Yesterday I found that my friend had built an i7-based 3 x 295 machine for FDTD.

I will soon build a Phenom-based 4 x 275 machine (total 4 x 1.8 GB GPU memory), at half the price of the above system based on the i7 and 295 (same memory, fewer GPUs but also less communication overhead through PCI-E).

To find the most efficient machine per dollar, my friend and I decided to test 4 different configurations for our FDTD code, to find out any board-to-board dependence of PCI-E, as well as data transfer issues related to our memory/data-transfer-dependent problem. I will post the results here.

Well, of course, for processor-dependent problems there are reports, and as evidenced by Jaak’s beautiful machine, AMD and the 295 would be the best choice. ^^;

You may see some PPD examples in this folder configurations list:

http://foldingforum.org/viewtopic.php?f=38&t=7902

Estonia has a supercomputer with 6000 processors!…

A GPU-based supercomputer with 6000 processors and 23 TFLOPS of performance. Anyone could manage it, if they had the money….

Cool config, Jaak!

keep movin 😀

Beautiful setup. Quick question, did you make the exposed motherboard carrier or buy it? I am using cheap PC cases and cutting out the side panels to assist in air flow.

Sean, the motherboard carriers are from Middle Atlantic:

http://www.middleatlantic.com/rackac/storage/shelves2.htm#2

some questions too…

1. Who is the manufacturer of your video cards? EVGA, BFG or … and why?

2. Are those 3U or 4U motherboard carriers? Are they in stock somewhere in Estonia?

I use Palit, XFX and GigaByte cards.

I had to order the shelves from http://www.middleatlantic.com/ . The clamping shelves allow the video cards to be fixed firmly.

Jaak

As promised earlier, I hereby report our results from FDTD testing on two different machines.

i7-based 3 x 295 machine (total 3 x 2 GB = 6 GB memory, $6,000) vs

Phenom-based 4 x 275 machine (total 4 x 1.8 GB = 7.2 GB memory, $3,000)

Findings :

1. Not much dependence on the choice of CPU, as expected.

2. 4 x 295 operation was not easy (actually it was not possible in our case).

3. With 3 x 295 the heat problem was much more serious than with 4 x 275 (1.8 GB version): 90 °C vs 75 °C.

4. Memory bandwidth was not a big problem in either case.

5. There was a speed difference, due to the number of GPUs (6×285 vs 4×275) and because the 285 is faster.

6. Cost vs performance was about the same, but over hours of running there was too much heat for the 295.

I hope this helps others, as I have benefited from this site.

On the other hand, the Tesla machine from my other friend was even slower than the 275!

Best,

First of all, I would like to tell you that your system is very impressive. However, I am not very clear on the CUDA setup. SLI is simple: you just use a 2-way or 3-way SLI bridge. If you are not using SLI mode, how do you tell all 3 x 295 to participate in CUDA? Is this option available in the NVIDIA control panel, or does it need to be done differently?

I think by default the GTX 295 is 2-way SLI (dual GPU). Can I make a single 295 participate in CUDA (not SLI)? If yes, will it give more performance than SLI?

I understand that the maximum you can have is Quad SLI, and if you have more GPUs then maybe the only way is CUDA and not SLI. I just want to know in general whether SLI or CUDA gives more performance, thinking of only 4 GPUs.

I am sorry if my questions are too basic for this discussion.

Rajiv,

CUDA and SLI are orthogonal. SLI has to be disabled to run CUDA. Also, you can run only one GPU of a 295, or both GPUs at the same time with MPI programming over CUDA. Performance scales very nicely with the number of GPUs!

You are right. CUDA works with a non-SLI setup only.

Jaak
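Since each GPU shows up as its own CUDA device, one common way to use them all is to start one worker process per GPU. The sketch below prepares the environments for that. It assumes a CUDA runtime that honours the `CUDA_VISIBLE_DEVICES` environment variable; the helper name is made up, and this is not necessarily how the F@H clients on this rig were configured.

```python
import os

def per_gpu_environments(num_gpus, base_env=None):
    """Build one environment per GPU, each exposing a single device to its worker."""
    base = dict(os.environ if base_env is None else base_env)
    envs = []
    for gpu_index in range(num_gpus):
        env = dict(base)
        # The worker started with this environment sees only this GPU, as device 0.
        env["CUDA_VISIBLE_DEVICES"] = str(gpu_index)
        envs.append(env)
    return envs

envs = per_gpu_environments(8, base_env={})
print([e["CUDA_VISIBLE_DEVICES"] for e in envs])
# ['0', '1', '2', '3', '4', '5', '6', '7']
```

Each dictionary can then be passed as the `env` argument of `subprocess.Popen` when launching your own worker program, so that 8 independent processes cover the 8 GPUs of a 4 x GTX 295 node.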

How did you mount the Motherboard to the rack shelf? Aren’t the holes offset?

The holes are offset. I drilled holes in the right places and used brass spacers, as PC cases do, to hold the motherboard.

Jaak

fyi this is out:

5.Maximum combined current for the 12 V outputs shall be 110A.

Click to access EN-ST1500-Manual.pdf

Click to access ST1500_cable_define.pdf

Including four modular 8+6 pin pcie cables!

Yet another! http://ultraproducts.com/product_details.php?cPath=97&pPath=632&productID=639

117A max along +12v. But only two 8 pin pcie cables from the look of it.
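To relate those 12 V current ratings to a 4-card node, a quick conversion helps. The sketch below treats each card as drawing 250 W (the upper end of the range quoted earlier in this thread) entirely from the 12 V rails and ignores the CPU and motherboard, which is a simplification; the function names are invented for illustration.

```python
def rail_watts(amps, volts=12.0):
    """Power available on the 12 V outputs for a given combined current."""
    return amps * volts

def headroom_after_cards(amps, cards=4, card_watts=250, volts=12.0):
    """Watts left on the 12 V outputs after feeding the GPU cards."""
    return rail_watts(amps, volts) - cards * card_watts

print(rail_watts(110), headroom_after_cards(110))  # 1320.0 320.0
print(rail_watts(117), headroom_after_cards(117))  # 1404.0 404.0
```

On paper either supply could feed four cards from a single unit, provided it offers enough PCIe connectors, which is the limitation noted for the second one.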

Hi, I’m Mattia from Italy.

I have a question. Must the four GTX295 cards on the same motherboard be identical? If one fails, must I change them all, or can I replace only the failed one?

Hi Mattia

No, they can be from different makers and different brands. I have personal experience of running 2 Palit and 2 GigaByte boards in the same system.

cheers

Jaak

thank you very much for your answer!!!

bye

mattia

Greetings, this is a most impressive system. I have really appreciated following the work. For your information RenderStream offers UL compliant GPGPU workstations and rackmounts using 4XGTX295 and 4XTesla systems (including in the Fermi upgrade program) with Linux or Windows XP or dual boot. Systems are built in an iso9001 compliant facility, OS and Cuda are installed, tested and then burned-in at 94 degrees Fahrenheit for 24 hours. Systems come with standard parts and labor in the USA and full on site warranties in the USA and internationally.

Now for my question: we are looking for commercial PCIe x16 extension cables and, separately, ruggedized adapter cables for a portable Tesla cluster we are building. Do any of you have a trusted vendor with whom you could put me in contact? Thank you.

Hi,

Nice information. I do have a few questions. Have you made any comparisons of the GTX295 vs Tesla with CUDA code? Any opinions on Tesla supporting double-precision math while the GTX 295 does not? I would tend to lean towards the Tesla if we need the enormous amount of memory: 4 GB vs 896 MB. I think that is the real cost behind these cards.

GTX295 cards are significantly faster than Tesla; we do not have exact numbers, though our customers say the 295 cards are nearly twice as fast. Double precision works on both cards: the GTX295 delivers 149 GFLOPS and the C1060 78 GFLOPS, the latter being nearly twice as fast as the newest Nehalem CPU. Fermi will bring about an 8X improvement in double precision relative to Tesla, though just a slight improvement in single precision. Here is a nice article from BFN* that you may be interested in reading:

http://www.brightsideofnews.com/news/2009/11/17/nvidia-nv100-fermi-is-less-powerful-than-geforce-gtx-285.aspx

If your interest is double precision, then the Fermi cards are the way to go, and for HPC the Tesla will beat the game cards in that they can maintain higher sustained computational rates and they have full ECC.

your time is soon to come http://twitter.com/NVIDIAQuadro/status/8215272895

Nice setup.

What is the computer case or housing that is shown in the second picture and where did you get it from?

Middle Atlantic shelves

more here https://estoniadonates.wordpress.com/2009/02/19/19-20-u-rack-ordered/



Message from 11 years in the future.

A mobile phone delivers 125 gigaflops now, in 2020.