CUDA against influenza

NVIDIA vs. ATI in Folding

We all know that ATI looks very good on paper in terms of theoretical TFLOPS, and its cards are also very good in gaming and 3D graphics in general. Still, in folding, ATI lags badly behind NVIDIA. Many folders using ATI cards are looking forward to DirectX 11 and new drivers, hoping they will increase their performance. Possibly it's wishful thinking, and NVIDIA will stay strong due to architectural differences. Here are a few very good threads analyzing this issue:

http://www.hardforum.com/showthread.php?p=1034740407

http://forum.beyond3d.com/showthread.php?t=50539

In short, ATI doesn't have memory near the GPU shaders for tasks more complicated than graphics processing (such as folding). NVIDIA has a more sophisticated multi-level memory architecture, which allows it to solve more complex tasks at the GPU shader level.

If you ignore all the flaming there, the final thought is this:

Vaulter98c ([H]ard|Gawd):

The problem isn’t that ATI GPUs can’t store “enough” data, it’s that they aren’t storing “any” data at all right now since F@H doesn’t use the LDS. And a single step of a single GPU workunit doesn’t require a particularly large amount of data storage, especially not with the small proteins that are currently being used for most of the workunits that are in the wild right now. Each shader unit (set of 4 standard FPUs and one special-function unit) has a 16KB LDS in RV770 and 32KB in RV870, which is more than enough to give a significant performance boost to overall work production speed.
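To make the LDS argument concrete, here is a minimal Python sketch of the tiling pattern a GPU kernel would use with local memory: the positions of a block of atoms are copied once into a small local buffer and then reused by every atom i before the next block is loaded. It is not Folding@home code. The 16-byte atom record (x, y, z plus padding as 32-bit floats), the toy 1/r² pairwise term and all function names are assumptions for illustration. The sketch also checks how many such records fit in the 16 KB and 32 KB local stores mentioned in the quote.

```python
import math
import random

# Assumption: one atom = x, y, z plus one padding float, all 32-bit -> 16 bytes.
BYTES_PER_ATOM = 16


def atoms_per_lds(lds_bytes, bytes_per_atom=BYTES_PER_ATOM):
    """How many packed atom records fit into a local store of lds_bytes."""
    return lds_bytes // bytes_per_atom


def pair_term(a, b):
    """Toy pairwise interaction 1/r^2 (stands in for a real force-field term)."""
    dx, dy, dz = a[0] - b[0], a[1] - b[1], a[2] - b[2]
    return 1.0 / (dx * dx + dy * dy + dz * dz)


def pair_sums_naive(pos):
    """Per-atom sum over all other atoms, reading every j from 'global' memory."""
    return [sum(pair_term(p, q) for j, q in enumerate(pos) if j != i)
            for i, p in enumerate(pos)]


def pair_sums_tiled(pos, tile_atoms):
    """Same result, but j-atoms are staged tile by tile into a small local buffer
    (playing the role of the LDS) and reused by every i before the next load."""
    out = [0.0] * len(pos)
    for start in range(0, len(pos), tile_atoms):
        local = pos[start:start + tile_atoms]  # one global -> local copy per tile
        for i, p in enumerate(pos):
            out[i] += sum(pair_term(p, q)
                          for k, q in enumerate(local) if start + k != i)
    return out


if __name__ == "__main__":
    print(atoms_per_lds(16 * 1024), atoms_per_lds(32 * 1024))  # 1024 2048
    random.seed(1)
    pts = [tuple(random.uniform(0.0, 10.0) for _ in range(3)) for _ in range(64)]
    a, b = pair_sums_naive(pts), pair_sums_tiled(pts, tile_atoms=16)
    print(all(math.isclose(x, y, rel_tol=1e-9) for x, y in zip(a, b)))  # True
```

The point of the pattern is that, within one work-group, each position is fetched from off-chip memory once per tile instead of once per interacting pair; under the assumed record size a 16 KB store holds up to 1024 atoms per tile.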

Here is also a very good (illustrated) blog post about the ATI GPGPU issue:

http://theovalich.wordpress.com/2008/11/04/amd-folding-explained-future-reveale/

Please enjoy!

AMD fails to improve folding performance with new models

AMD was the first graphics chip maker to support the Folding@home client. AMD graphics chips have been able to run these calculations since September 2006, while NVIDIA cards joined the pack only in the summer of 2008.

Colleagues report that Folding@home speed has almost stopped growing as Radeon model numbers go up. In particular, the Radeon HD 4870 and Radeon HD 4870 X2 show similar results, and the newest card, the Radeon HD 5870 with 1 GB of GDDR5 memory, differs only slightly.

As experts explain, the problem is that the Folding@home client is written in Brook+, while the new generation of AMD video cards with DirectX 11 support relies on OpenCL. Until the developers update the Folding@home client, NVIDIA cards will deliver higher results. Note that AMD chips do not show a similar lag in other types of GPGPU applications; this is a special case.

from Xtreview.

NVIDIA GT300 (Fermi): latest tech data

Based on information gathered so far about GT300/Fermi:

- About 3 billion transistors

- Built on a 40 nm process

- 512 shader processors (cores)

- 32 cores per core cluster

- 384-bit GDDR5 memory

- 1 MB L1 cache memory, 768 KB L2 unified cache memory

- Up to 6 GB of total memory, 1.5 GB expected for the consumer product

- IEEE 754 double-precision floating point at half the single-precision rate

- Native support for C (CUDA), C++ and Fortran; support for DirectCompute 11, DirectX 11, OpenGL 3.1 and OpenCL
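A quick sanity check of how the listed figures relate to each other, as a Python sketch. The cluster count and the per-cluster L1 follow directly from the list. The memory data rate is not given in the rumours, so the 4.0 GT/s value in the example is a hypothetical placeholder; bandwidth is simply the bus width in bytes times transfers per second.

```python
TOTAL_CORES = 512        # from the list above
CORES_PER_CLUSTER = 32   # from the list above
L1_TOTAL_KB = 1024       # "1 MB L1" from the list above
BUS_WIDTH_BITS = 384     # "384-bit GDDR5" from the list above


def cluster_count(total_cores=TOTAL_CORES, per_cluster=CORES_PER_CLUSTER):
    """Number of core clusters implied by the core counts."""
    return total_cores // per_cluster


def l1_per_cluster_kb(l1_total_kb=L1_TOTAL_KB):
    """L1 per cluster, assuming the 1 MB total is split evenly across clusters."""
    return l1_total_kb // cluster_count()


def bandwidth_gb_s(data_rate_gtps, bus_width_bits=BUS_WIDTH_BITS):
    """Peak memory bandwidth in GB/s: bytes per transfer x billions of transfers/s."""
    return bus_width_bits / 8 * data_rate_gtps


if __name__ == "__main__":
    print(cluster_count())       # 16
    print(l1_per_cluster_kb())   # 64
    print(bandwidth_gb_s(4.0))   # 192.0, for a hypothetical 4.0 GT/s data rate
```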

Based on TechPowerUp news.

Read a good article today about the DirectCompute API and other…

Today I read a good article about DirectCompute, OpenGL, DirectX 11, NVIDIA CUDA, ATI FireStream and other hot topics:

http://www.theregister.co.uk/2009/09/28/microsoft_nvidia_collaboration/

Most powerful GPU from ATI — Radeon HD 5800

Up to 2.72 TFLOPS per card: the new ATI Radeon HD 5800 series fully supports Windows 7 and OpenGL and is DirectX 11 compatible. It is the first card to support the DirectCompute 11 standard. As we know, NVIDIA's GT300 will not be ready before December this year, so ATI has a lead of some 4-5 months in next-generation DirectX 11 product development.
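Where does 2.72 TFLOPS come from? Peak single-precision throughput is usually quoted as ALU count × clock × FLOPs per ALU per clock. The sketch below assumes the published Radeon HD 5870 figures (1600 stream processors at 850 MHz, with a multiply-add counted as 2 FLOPs), which are not stated in this post. It is a theoretical peak, not a folding benchmark.

```python
def peak_gflops(alus, clock_mhz, flops_per_clock):
    """Theoretical peak in GFLOPS: ALUs x clock (MHz) x FLOPs per ALU per clock / 1000."""
    return alus * clock_mhz * flops_per_clock / 1000.0


# Assumed Radeon HD 5870 specs: 1600 stream processors, 850 MHz, multiply-add = 2 FLOPs.
hd5870 = peak_gflops(alus=1600, clock_mhz=850, flops_per_clock=2)
print(f"{hd5870 / 1000:.2f} TFLOPS")  # 2.72 TFLOPS
```

How much of this peak a real workload reaches depends mostly on memory access patterns, which is exactly where the folding discussion above comes in.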

Until now, ATI has not performed well in Folding@home, delivering lower performance per dollar than NVIDIA. I hope to see a change in this area too.

DirectCompute 11 is Microsoft's application programming interface (API) that takes advantage of the massively parallel processing power of a GPU to accelerate application performance on Windows Vista and Windows 7. DirectCompute is part of Microsoft's DirectX collection of APIs. This means that a PC equipped with an ATI Radeon HD 5800 will be significantly faster in certain tasks, because the card's stream processors work in parallel with the PC's main processor.
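DirectCompute follows the usual GPU data-parallel model: a compute shader declares its thread-group size with `[numthreads(...)]`, the host calls `Dispatch` with a number of thread groups, and each thread finds its element through `SV_DispatchThreadID`. The Python sketch below only emulates that indexing sequentially (on a GPU the groups run in parallel); the group size of 256 and the function names are hypothetical.

```python
THREADS_PER_GROUP = 256  # hypothetical; mirrors [numthreads(256, 1, 1)] in HLSL


def groups_needed(n, threads_per_group=THREADS_PER_GROUP):
    """Ceiling division: how many thread groups the host would pass to Dispatch."""
    return (n + threads_per_group - 1) // threads_per_group


def emulate_dispatch(kernel, data, threads_per_group=THREADS_PER_GROUP):
    """Run kernel once per element, computing each thread's global index the way
    SV_DispatchThreadID.x does (group id * group size + thread id in group)."""
    out = [None] * len(data)
    for group_id in range(groups_needed(len(data), threads_per_group)):
        for local_id in range(threads_per_group):
            tid = group_id * threads_per_group + local_id
            if tid < len(data):  # the last group may be only partly used
                out[tid] = kernel(data[tid])
    return out


if __name__ == "__main__":
    values = list(range(1000))
    print(groups_needed(len(values)))                     # 4
    print(emulate_dispatch(lambda x: x * x, values)[:5])  # [0, 1, 4, 9, 16]
```

The bounds check matters because the element count is rarely a multiple of the group size: here 1000 elements need 4 groups of 256, leaving 24 idle threads in the last group.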

New 80-core CPU machine from SGI

As you can see from this image, the new SGI Octane III has 10 blades, each with two 4-core Intel Xeon 5500 series CPUs, for a total of 80 cores in one small rack. Pretty neat supercomputer. All blades are interconnected by Gigabit Ethernet or InfiniBand. The system supports Red Hat or SUSE Linux, the SGI Isle cluster manager and the Altair PBS batch scheduler.


more: Gizmodo

GT300 announcement in December?

According to several sources, the GT300 announcement will come in December: NVIDIA's head will visit TSMC in October to check how ready the chip is for volume shipments.

At the same time, NVIDIA will discuss pricing for the 40 nm production run at TSMC. In July, TSMC managed to raise the yield of good 40 nm chips from 30% to 60%.
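Why yield matters for the price talks: with a fixed wafer price, cost per good die is wafer cost ÷ (dies per wafer × yield), so going from 30% to 60% halves it. A Python sketch: the wafer cost is normalised to 1.0 rather than a real TSMC price, and the 104 dies per wafer is borrowed from the GT300 estimate quoted in the next paragraph.

```python
def good_dies(dies_per_wafer, yield_fraction):
    """Expected number of working dies on one wafer."""
    return dies_per_wafer * yield_fraction


def cost_per_good_die(dies_per_wafer, yield_fraction, wafer_cost=1.0):
    """Wafer cost spread over the working dies only (wafer_cost normalised to 1.0)."""
    return wafer_cost / good_dies(dies_per_wafer, yield_fraction)


if __name__ == "__main__":
    dies = 104  # GT300 estimate quoted in this post
    before = cost_per_good_die(dies, 0.30)
    after = cost_per_good_die(dies, 0.60)
    print(f"good dies: {good_dies(dies, 0.30):.1f} -> {good_dies(dies, 0.60):.1f}")
    print(f"cost per good die falls by a factor of {before / after:.1f}")
```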

GT300 will be the most powerful CUDA chip ever. Based on technical data about the GT300's shader frequencies, memory speed and more, Hardware-Infos (Germany) estimates GT300 performance at 2.4 TFLOPS, more than twice the GTX 280's 0.933 TFLOPS. The comparison is not direct, as GT300 supports a MIMD architecture while GTX 280 is SIMD only. The GPU is rumoured to have a rather large die area of 452 mm², which means that only about 104-105 such dies fit on one 300 mm wafer.
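Both the 0.933 TFLOPS figure and the die count can be approximated with simple formulas. The sketch assumes the published GTX 280 specs (240 shader processors at a 1296 MHz shader clock, 3 FLOPs per clock from a dual-issued multiply-add plus multiply), which are not stated in the post, and uses the common gross-die approximation π·(d/2)²/S − π·d/√(2S). That formula ignores edge exclusion and scribe lines, so it gives about 125 candidate dies, above the 104-105 quoted; treat it as a rough upper bound rather than a check of the rumour.

```python
import math


def peak_gflops(alus, clock_mhz, flops_per_clock):
    """Theoretical peak in GFLOPS: ALUs x clock (MHz) x FLOPs per ALU per clock / 1000."""
    return alus * clock_mhz * flops_per_clock / 1000.0


def gross_dies_per_wafer(die_area_mm2, wafer_diameter_mm=300.0):
    """Common approximation: wafer area / die area minus a loss term for partial
    dies along the edge. Ignores edge exclusion, scribe lines and defects."""
    r = wafer_diameter_mm / 2.0
    whole = math.pi * r * r / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2)
    return math.floor(whole - edge_loss)


if __name__ == "__main__":
    gtx280 = peak_gflops(alus=240, clock_mhz=1296, flops_per_clock=3)  # assumed specs
    print(f"GTX 280 peak: {gtx280:.2f} GFLOPS")               # GTX 280 peak: 933.12 GFLOPS
    print(f"rumoured GT300 / GTX 280: {2400 / gtx280:.2f}x")  # rumoured GT300 / GTX 280: 2.57x
    print(gross_dies_per_wafer(452.0))                         # 125
```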

NVIDIA received the first working samples from TSMC (Taiwan Semiconductor Manufacturing Company) at the beginning of September.

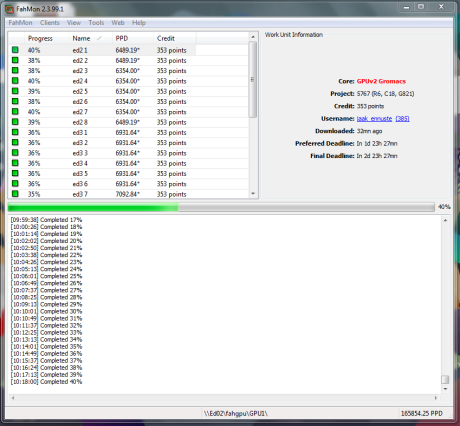

Restarted my GPU cluster

After a long summer vacation, I restarted my GPU cluster at 166K PPD.

About 165,000-166,000 PPD